DARN

I hacked 1023 servers in 3 minutes at ICHack 2026. Then, I ran LLMs on them.

I'm going to preface with this: this project is a bit of fun, and is not meant to be taken seriously. Doing what is outlined in this blog post is ethically questionable, and as such needs to be done with sufficient guardrails. Do not try this at home!

I have recently been captivated by human extremes, specifically the Arctic and the Antarctic. I find it amazing that we've managed to set up bases and research stations in some of the most inhospitable places on Earth, and that we have people living and working there for extended periods of time. This train of thought led me to a question: could I ping a server in Antarctica?

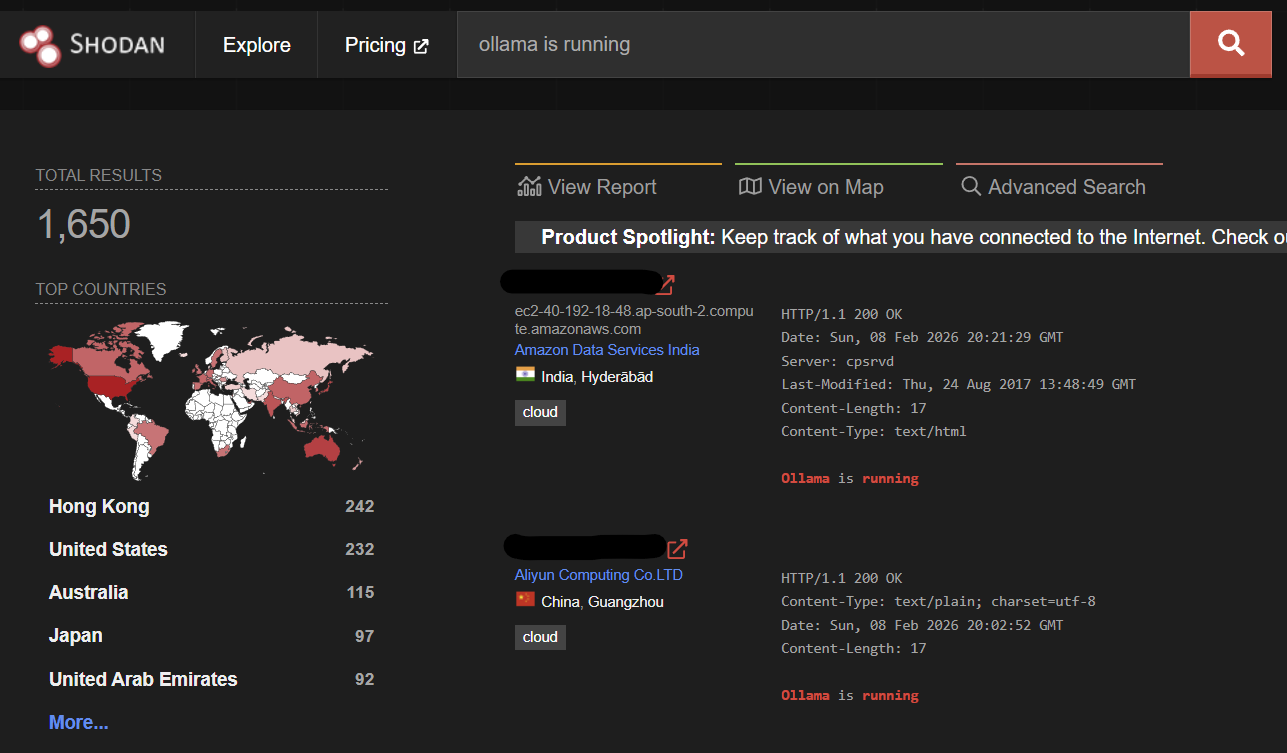

Turns out, yes, well, kinda, but that's a story for another time. While doing that research, though, I came across a tool called Shodan.io. Shodan is a search engine for internet-connected devices. It lets you search for specific types of devices, such as webcams, routers and servers. It also lets you filter devices by location, which is why it was useful for finding servers in Antarctica.

Shodan scans the public internet for devices, and then stores metadata about them in a database. You can query this with their API (which is fairly generous when you verify with an .ac.uk email). I had lots of fun poking around at different printers in Malaysia and car park webcams in Japan, but I wanted to try something ambitious.

So, I searched "ollama is running", which is the plain-text message an Ollama server returns at its root URL. What is Ollama? Well, it's a fairly new tool for running LLMs locally on your own servers and hardware. It's a great alternative to using the OpenAI API if you've got a semi-good gaming GPU lying about. You can download LLMs locally and then query an HTTP API on your own PC for inference.
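To make the "query your PC's HTTP API" part concrete, here is a minimal sketch of talking to an Ollama instance on your own machine. It assumes Ollama is running locally on its default port and that you have already pulled a model; the model name `llama3.2` and the helper names are just placeholders I picked for illustration.

```python
import json
import urllib.request

# Ollama's default local address. Point this only at a machine you own.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(
    prompt: str,
    model: str = "llama3.2",  # assumption: swap in whichever model you pulled
    base_url: str = OLLAMA_URL,
) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3.2", timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        return json.loads(reply.read())["response"]


# Example (needs a local Ollama server with the model available):
# print(generate("Why is the sky blue?"))
```

With `"stream": False` the server sends back a single JSON object rather than a stream of chunks, which keeps the sketch short.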

Ollama is running!

The thing with Ollama is that:

- It's fairly new, and it doesn't ship with any authentication or security features yet. So if you find a server running Ollama, you can just send it requests to run LLMs on its hardware.

- Servers running it are easy to find: they all expose port 11434 by default, which isn't a common port for anything else.

- Ollama is localhost-only by default, but a lot of people expose it to the network so their web apps can reach it more easily. In doing this, they make a big cybersecurity mistake, because now anyone can find their server and run LLMs on it.
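If you run Ollama yourself, the last point is the one to check. The bind address is controlled by the `OLLAMA_HOST` environment variable, and the sketch below classifies a value as loopback-only or not. The function name is hypothetical, and the parsing is a rough approximation rather than Ollama's own logic, so treat it as a sanity check only.

```python
import ipaddress
from urllib.parse import urlsplit

DEFAULT_BIND = "127.0.0.1:11434"  # Ollama's default: reachable from localhost only


def bind_is_loopback_only(ollama_host: str = DEFAULT_BIND) -> bool:
    """Return True if an OLLAMA_HOST-style value only listens on loopback.

    Hypothetical helper: it accepts forms such as "0.0.0.0", "127.0.0.1:11434"
    or "http://0.0.0.0:11434" and is deliberately conservative about
    anything it cannot parse.
    """
    value = ollama_host.strip() or DEFAULT_BIND
    if "//" not in value:
        value = "//" + value  # lets urlsplit treat the value as host[:port]
    hostname = urlsplit(value).hostname
    if hostname is None:
        return False  # no host part found; flag it for a manual look
    if hostname == "localhost":
        return True
    try:
        return ipaddress.ip_address(hostname).is_loopback
    except ValueError:
        return False  # a named host; assume it may be reachable from outside


# Example: check the value your own service is configured with.
# import os
# print(bind_is_loopback_only(os.environ.get("OLLAMA_HOST", DEFAULT_BIND)))
```

If this returns `False` for your setup, the safer options are to go back to the default bind address or to put the API behind a reverse proxy or firewall that handles authentication.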

Me on a duck at ICHack '26

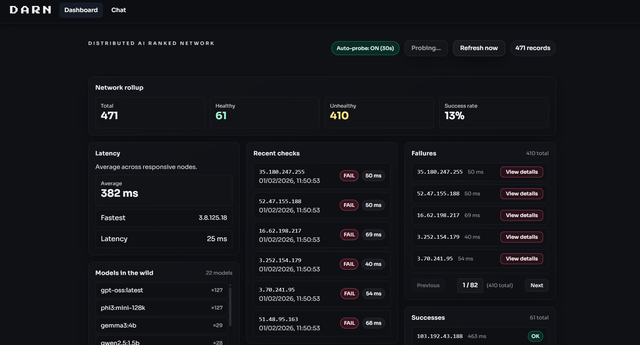

So, I put together a team and we wrote a UI that simplifies the process of using someone else's hardware to run LLMs. We called it DARN - Distributed AI Ranking Network. It's a bit of fun, but it also highlights the importance of cybersecurity and the potential risks of exposing services to the web without proper authentication and security measures in place.

First, it goes to Shodan and gets a list of servers running Ollama. Then, it sends requests to those servers to run LLMs on them. The results are then aggregated and ranked based on the quality of the responses, the time taken to respond, and other metrics.
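The ranking step is easy to sketch on its own. This is not DARN's actual scoring code: the `ProbeResult` fields, the 70/30 weighting and the 30-second latency cap are all assumptions made up for illustration, and it only works on measurements you have already collected.

```python
from dataclasses import dataclass


@dataclass
class ProbeResult:
    host: str         # label for the server that answered
    quality: float    # response quality in [0, 1], however you choose to judge it
    latency_s: float  # seconds taken to respond


def score(result: ProbeResult, max_latency_s: float = 30.0, quality_weight: float = 0.7) -> float:
    """Blend quality and speed into a single number (higher is better)."""
    # Speed is 1.0 for an instant reply and falls to 0.0 at max_latency_s or slower.
    speed = max(0.0, 1.0 - result.latency_s / max_latency_s)
    return quality_weight * result.quality + (1.0 - quality_weight) * speed


def rank(results: list[ProbeResult]) -> list[ProbeResult]:
    """Return the results ordered from best to worst score."""
    return sorted(results, key=score, reverse=True)


# Example with made-up measurements:
sample = [
    ProbeResult("host-a", quality=0.9, latency_s=3.0),
    ProbeResult("host-b", quality=0.1, latency_s=1.0),
    ProbeResult("host-c", quality=0.9, latency_s=20.0),
]
best_first = [r.host for r in rank(sample)]
```

Weighting quality above speed means a fast server that returns nonsense still sinks to the bottom of the list.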

Then, you can use a chatbox to send messages to the network, and it will send them to the highest-ranked servers and get responses back.

DARN in action

I left it running and it found 1023 servers running Ollama around the world. Interestingly, only about 15% actually gave me proper LLM responses. The others spewed out random token nonsense. I suspect the other 85% were set up by auto-provisioning services that misconfigured Ollama during setup, but I didn't get a conclusive answer on this.

So, yes, DARN. A distributed network of LLMs running on other people's hardware. Just make sure you secure Ollama! Thanks to my teammates MM and CH for their help making this come to life!